fxizel

New Member

Good day,

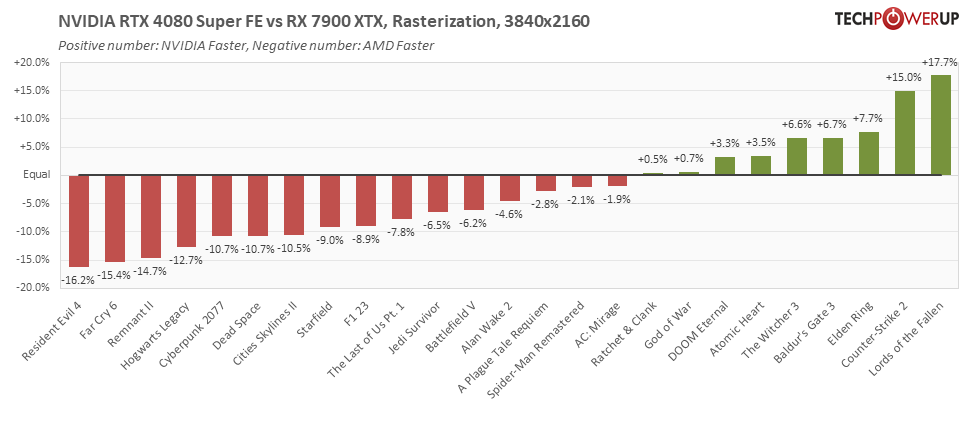

I am going to be purchasing a gaming PC soon and need some advice. I am stuck between the 4080 Super and the 7900 XTX. I've heard a lot of good things about both, but I can't seem to decide between them. I mainly play Call of Duty, but I'd also like this to be a bit of a work machine, as I work in tech as a software engineer (I know, ironic that I can't choose).

Any tips would be great!
